How Computers Got Fast

In April 2022, Epic Games released its new game engine, Unreal Engine 5. The engine was groundbreaking in its ability to render increasingly realistic worlds, with models made up of millions of polygons and fully dynamic lighting, all in real time. As impressive as this is from a software perspective, it would never have been possible without the advances in computer hardware over the past decades. In that time, computer performance has increased exponentially, and although this curve has flattened in recent years, new chips are still being developed that are more powerful than last year’s models.

The Apple Macintosh, released in 1984 and one of the first mass-market home computers with a graphical user interface, had a clock speed of about 8 MHz, 128 KB of RAM, and a price equivalent to about €6300 in today’s currency. Today, by comparison, you can buy a laptop with 8 GB of RAM and a clock speed of several GHz for less than a thousand euros. Computers have not only become significantly faster, but also a lot cheaper.

In this article, we will look at how computers have become more powerful and how this has allowed us to tackle much larger tasks. We will examine how computer components have improved, how CPU architecture has allowed us to perform more tasks at the same time, and how specialized components such as GPUs massively increase performance for certain tasks.

Moore’s law and advances in component design

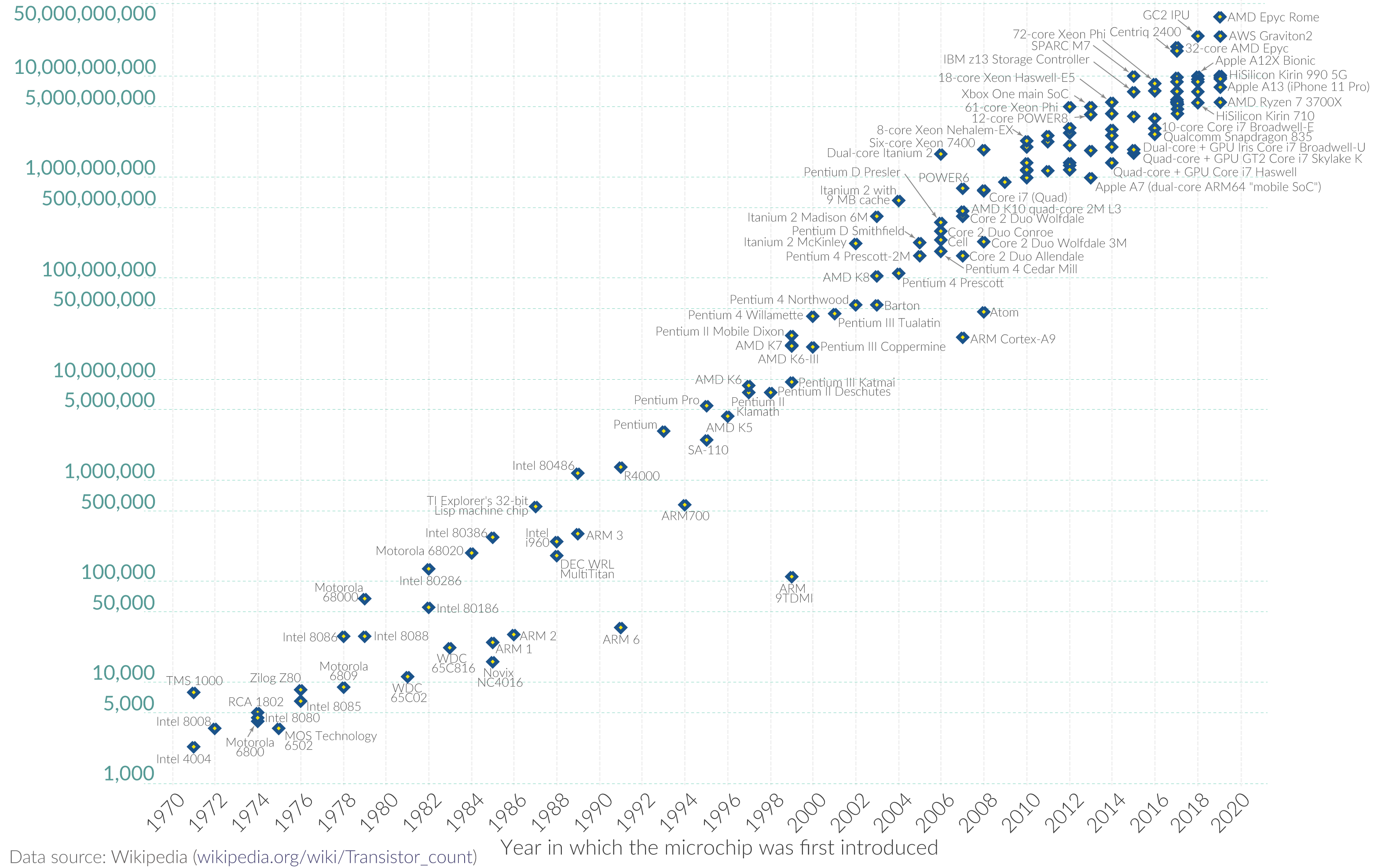

Any discussion of the performance of modern computers inevitably brings up Moore’s law. Moore’s law is an observation made in 1965 by Gordon Moore, who later co-founded Intel, that the number of transistors on an integrated circuit roughly doubles every year. In 1975, he revised his prediction to a doubling every two years, and this prediction has held up remarkably well for more than 40 years [1], as shown in Figure 1.
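As a rough illustration, the 1975 form of Moore’s law is an exponential with a two-year doubling period: N(t) = N0 · 2^((t − t0) / 2). The sketch below assumes the Intel 4004 (1971, about 2,300 transistors) as its starting point; the function name is hypothetical, and the numbers it prints are projections of the trend, not measured transistor counts.

```python
def projected_transistors(n0: float, year0: int, year: int,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count with Moore's law.

    n0: transistor count in the reference year year0.
    doubling_period: years per doubling (2.0 for the 1975 revision,
    1.0 for the original 1965 observation).
    """
    doublings = (year - year0) / doubling_period
    return n0 * 2 ** doublings


# Assumed starting point: Intel 4004 (1971, ~2,300 transistors).
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(2_300, 1971, year):,.0f}")
```

Fifty years at one doubling every two years is 25 doublings, so the projection reaches roughly 77 billion transistors by 2021.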

One reason we have been able to fit more transistors on a chip is that transistors themselves have shrunk exponentially. In 1971, commercial chips were manufactured with features in the range of 10 micrometres; by 2016, manufacturing processes had reached the range of 10 nanometres, a thousand times smaller (although modern process names such as 10 nm no longer correspond exactly to any single physical dimension of a transistor).
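The shrink matters even more than the factor of a thousand suggests: a chip is two-dimensional, so if every length scales by a factor s, the area per transistor scales by roughly s². The sketch below applies that idealised rule to the 10 µm and 10 nm figures; real processes do not shrink every feature uniformly, so the result is an illustration of the geometry rather than an actual density figure. The function name is hypothetical.

```python
def density_gain(old_feature_m: float, new_feature_m: float) -> float:
    """Idealised increase in transistors per unit area from a shrink.

    Assumes every dimension of a transistor scales by the same linear
    factor, so the area per transistor scales with its square.
    """
    linear_factor = old_feature_m / new_feature_m
    return linear_factor ** 2


gain = density_gain(10e-6, 10e-9)  # 10 micrometres -> 10 nanometres
print(f"linear shrink: {10e-6 / 10e-9:,.0f}x, density gain: {gain:,.0f}x")
```

A thousandfold linear shrink therefore allows, in this idealised picture, about a million times as many transistors in the same area.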

One of the key technologies that has kept Moore’s law going is deep ultraviolet (UV) excimer laser photolithography. Developed in the early 1980s by Kanti Jain, this technique shines UV laser light through a mask to transfer the pattern of the integrated circuit onto a light-sensitive coating on a silicon wafer, which is then etched. Because shorter wavelengths can resolve finer details, modern techniques that use UV light with ever shorter wavelengths can create smaller patterns, and therefore smaller transistors.
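Why a shorter wavelength gives smaller features can be made concrete with the Rayleigh criterion commonly used in lithography, CD = k1 · λ / NA, where CD is the smallest printable feature (the critical dimension), λ the wavelength, NA the numerical aperture of the optics, and k1 a process-dependent factor. The values below are illustrative assumptions, not figures from this article: 193 nm light from an argon-fluoride (ArF) excimer laser with immersion optics (NA ≈ 1.35), 13.5 nm extreme ultraviolet (EUV) light (NA ≈ 0.33), and k1 = 0.3 for both.

```python
def min_feature_nm(wavelength_nm: float, numerical_aperture: float,
                   k1: float = 0.3) -> float:
    """Rayleigh resolution estimate: CD = k1 * wavelength / NA."""
    return k1 * wavelength_nm / numerical_aperture


# Assumed, illustrative settings for two generations of lithography.
duv = min_feature_nm(193.0, 1.35)   # ArF excimer laser, immersion optics
euv = min_feature_nm(13.5, 0.33)    # extreme ultraviolet
print(f"DUV: ~{duv:.0f} nm, EUV: ~{euv:.0f} nm")
```

With everything else fixed, the estimate is proportional to the wavelength, which is the trend described above; improvements in NA and k1 also contribute in practice.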

Besides making components smaller, modern CPUs also gain performance by increasing the number of cores on each chip. In the early days of computing, a CPU contained a single core that could execute only one thread at a time. With multiple cores on a chip, the CPU can execute several tasks at the same time, which suits modern operating systems, where many processes, such as background tasks and web browsers, run concurrently. Many modern Intel processors also allow a single core to run two threads at once. This technique, Intel’s implementation of simultaneous multithreading known as Hyper-Threading, was first introduced in 2002 on Intel’s Xeon CPUs. When part of the core is not being used by one thread, the other thread can use it instead, which increases the total throughput of the core.
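Dividing one job across several cores can be sketched with Python’s standard `concurrent.futures` module: the range of numbers is cut into one chunk per worker, each chunk is processed independently, and the partial results are summed. Prime counting is only a stand-in workload, and the function names are hypothetical. One caveat, also noted in the code: in the standard CPython build, threads do not run Python code on several cores at once, so for a real multi-core speed-up the `ThreadPoolExecutor` would be swapped for a `ProcessPoolExecutor` (which, on some platforms, must be created under an `if __name__ == "__main__":` guard).

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Optional


def count_primes(lo: int, hi: int) -> int:
    """Count primes in [lo, hi) by trial division (deliberately simple)."""
    count = 0
    for n in range(max(lo, 2), hi):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def parallel_count_primes(limit: int, workers: Optional[int] = None) -> int:
    """Split [0, limit) into one chunk per worker and add up the partial counts."""
    if limit <= 0:
        return 0
    workers = workers or os.cpu_count() or 1
    step = -(-limit // workers)  # ceiling division, so no numbers are skipped
    los = list(range(0, limit, step))
    his = [min(lo + step, limit) for lo in los]
    # Each chunk is independent, so each worker can process its own chunk.
    # In the standard CPython build, threads do not execute Python code on
    # several cores at once (the global interpreter lock); ProcessPoolExecutor
    # offers the same map() interface and runs chunks in separate processes.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, los, his))


print(parallel_count_primes(10_000))
```

Because `count_primes` is a module-level function, it can be sent to other processes, so switching to `ProcessPoolExecutor` needs no other change in this sketch.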

GPUs, Quake and ChatGPT

One important component developed over the history of computing is the Graphics Processing Unit (GPU), a processor designed mainly for computer graphics and image processing. A GPU consists of a large number of small cores that can execute code in parallel. As a result, any task that can be split into a large number of independent computations can run efficiently on a GPU, although most GPUs are still optimized for computer graphics and video transcoding. In many computers the GPU is a separate component, but many CPUs also contain a small integrated graphics processor of their own.
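The kind of work a GPU is built for can be sketched in plain Python: one small function, often called a kernel, is applied independently to every element of a large array, here every pixel of a greyscale image. This sketch runs sequentially on the CPU; the point is that no call depends on any other, which is what lets a GPU hand each pixel to a separate thread. The function names and the brightness operation are illustrative choices.

```python
def brighten_kernel(pixel: int, amount: int) -> int:
    """Per-pixel kernel: add brightness and clamp to the 8-bit range 0..255."""
    return max(0, min(255, pixel + amount))


def brighten_image(image: list[list[int]], amount: int) -> list[list[int]]:
    """Apply the kernel to every pixel.

    Each call reads one input pixel and writes one output pixel, with no
    dependence on its neighbours, so on a GPU all calls could run at once.
    """
    return [[brighten_kernel(p, amount) for p in row] for row in image]


image = [[0, 100, 200],
         [50, 150, 250]]
print(brighten_image(image, 40))
```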

In the 20th century, graphics processors were developed mainly to display graphics efficiently, relieving the main processor of that work. In the 1970s, arcade cabinets already used specialized chips to drive the display. By the mid-1980s, graphics hardware was becoming available for PCs; it mainly accelerated 2D graphics and supported higher resolutions, typically up to 640×480 pixels at the time.

In the 1990s, as video games moved into three dimensions, GPUs allowed games to achieve much higher graphical fidelity. The video game Quake (Figure 2) is a good example: with a GPU, it could run at much higher resolutions and with better image quality, using 16-bit colour instead of 8-bit colour.
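The jump from 8-bit to 16-bit colour is larger than the numbers suggest, because each extra bit doubles the number of values a pixel can take: an 8-bit pixel has only 256 possible values (typically indices into a colour palette), while a 16-bit pixel has 65,536. The sketch below also estimates how much memory one full frame needs; the resolution is an example, and real hardware may pad rows or keep extra buffers, such as a second frame for double buffering.

```python
def colour_count(bits_per_pixel: int) -> int:
    """Number of distinct values a pixel of this bit depth can hold."""
    return 2 ** bits_per_pixel


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to store one uncompressed frame (no row padding)."""
    return width * height * bits_per_pixel // 8


for bpp in (8, 16):
    print(f"{bpp}-bit: {colour_count(bpp):,} colours, "
          f"640x480 frame = {framebuffer_bytes(640, 480, bpp):,} bytes")
```

Doubling the colour depth doubles the memory each frame needs, which is one reason dedicated graphics hardware with its own memory and bandwidth made higher colour depths practical.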

As mentioned before, any problem consisting of a large number of parallel computations can be executed efficiently on a GPU. This is why GPUs are very popular for mining cryptocurrency and for training large neural networks and other machine learning models. Machine learning libraries such as TensorFlow let users train their models with GPU acceleration. A prominent example is ChatGPT, which builds on OpenAI’s GPT-3 family of language models; GPT-3 itself has 175 billion parameters and was trained using 10,000 GPUs. This not only shows the immense computing power of modern supercomputers, but also highlights that, because the work is so highly parallel, developers can scale up by adding more devices instead of needing faster individual processors.
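Adding more GPUs helps because of data parallelism: each device computes the gradient of the loss on its own slice of a training batch, and these gradients are then averaged. The sketch below shows the idea for a deliberately tiny model, a line y ≈ w·x + b trained with mean squared error, with the devices simulated by a loop; the function names are hypothetical, and real frameworks perform the averaging step across actual hardware.

```python
def mse_gradient(w: float, b: float,
                 xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Gradient of mean squared error for the model y = w*x + b."""
    n = len(xs)
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    dw = sum(2 * e * x for e, x in zip(errors, xs)) / n
    db = sum(2 * e for e in errors) / n
    return dw, db


def data_parallel_gradient(w: float, b: float, xs: list[float],
                           ys: list[float], num_devices: int) -> tuple[float, float]:
    """Split the batch into equal shards, one per (simulated) device,
    compute each shard's gradient independently, then average them."""
    if len(xs) % num_devices:
        raise ValueError("batch size must be divisible by num_devices")
    shard = len(xs) // num_devices
    grads = [
        mse_gradient(w, b, xs[i * shard:(i + 1) * shard],
                     ys[i * shard:(i + 1) * shard])
        for i in range(num_devices)
    ]
    # With equal shard sizes, the mean of the shard gradients equals the
    # full-batch gradient, so the result matches single-device training.
    dw = sum(g[0] for g in grads) / num_devices
    db = sum(g[1] for g in grads) / num_devices
    return dw, db


xs = [float(i) for i in range(8)]
ys = [2.0 * x + 1.0 for x in xs]  # data generated from w = 2, b = 1
print(mse_gradient(0.0, 0.0, xs, ys))
print(data_parallel_gradient(0.0, 0.0, xs, ys, num_devices=4))
```

Each shard’s gradient depends only on that shard, so the shards can be handed to as many devices as are available; only the final averaging step needs communication.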

A long way from the start

As we have seen, improvements in technology, manufacturing processes and the design of specialized components have allowed us to build computers that can process massive amounts of data and perform enormous numbers of calculations. Over the last decades, computers have become exponentially more powerful thanks to smaller components and parallelization across multiple cores. Although Moore’s law has held for decades, transistor sizes are now approaching physical limits. CPU performance is still increasing, but hardly at the same pace as 10 years ago.

Luckily, there are still other ways to make computers more powerful. Technologies such as the GPU continue to improve the ability of computers to perform parallel tasks, and our growing ability to distribute computation over large numbers of machines, for example in cloud data centres, offers a separate way to scale up processing power. According to Lambda Labs [2], the previously mentioned training of GPT-3 would have taken over 350 years on a single GPU.
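The two figures in this article can be combined into a back-of-the-envelope estimate: if training needs roughly 350 years on a single device and the work could be spread perfectly over 10,000 GPUs, the wall-clock time falls to under two weeks. Perfect linear scaling is an optimistic assumption, since real clusters lose time to communication and synchronisation, so the sketch adds a hypothetical `scaling_efficiency` parameter; the result is a lower bound, not the actual training time.

```python
DAYS_PER_YEAR = 365.25


def wall_clock_days(single_device_years: float, num_devices: int,
                    scaling_efficiency: float = 1.0) -> float:
    """Wall-clock days when the work is split across num_devices.

    scaling_efficiency = 1.0 assumes perfect linear speed-up; values
    below 1.0 model overhead such as communication between devices.
    """
    effective_devices = num_devices * scaling_efficiency
    return single_device_years * DAYS_PER_YEAR / effective_devices


print(f"{wall_clock_days(350, 10_000):.1f} days")       # perfect scaling
print(f"{wall_clock_days(350, 10_000, 0.5):.1f} days")  # assumed 50% efficiency
```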

Over more than 40 years, we have seen incredible improvements in both the power and the cost of computers. Even the phones in our pockets are much faster than many computers from 10 years ago. Thanks to this, and to extensive research into optimizing both software and hardware, we now have programs with incredible graphical fidelity, such as those showcased by Unreal Engine 5.