From Turing to ChatGPT: The Smartest Text Generator in the World

The quest to build Artificial Intelligence, or intelligent machinery, is in my opinion very interesting, because it brings humanity closer to the question of what it means to be intelligent. But what does intelligent machinery, or the more suitable term “intelligent computers”, actually mean?

Intelligent Computers

To answer this question, I am going to draw a parallel between the human computer of the early 20th century and the modern digital computer. In “Computing Machinery and Intelligence” (1950), Alan Turing explains the idea behind the digital computer as a machine intended to carry out any operation that a human computer could do. Using this definition, our next question would be: “What could a human computer do, according to Turing?” I assume it would have been calculations like “compute the Taylor polynomial of sin(x-1)” or similar repetitive arithmetic. In any case, the tasks given to a human computer were repetitive, boring and arithmetic. So, if the digital computer was designed to mimic the human computer, the tasks given to the digital computer may, for the sake of this analogy, be said to be repetitive, boring and arithmetic as well.
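To make this kind of task concrete, here is a minimal Python sketch of the repetitive arithmetic a human computer might have carried out by hand. It assumes the expansion is taken around x = 1 (the text does not say which point), where, with u = x - 1, sin(x-1) = u - u^3/3! + u^5/5! - ... The function name `taylor_sin_shifted` and the default of five terms are my own illustrative choices.

```python
import math


def taylor_sin_shifted(x: float, n_terms: int = 5) -> float:
    """Approximate sin(x - 1) by its Taylor polynomial around x = 1 (assumed center).

    With u = x - 1: sin(u) = u - u^3/3! + u^5/5! - ...
    Every term is one small, mechanical step, exactly the kind of
    work a human computer would repeat over and over.
    """
    u = x - 1.0
    total = 0.0
    for k in range(n_terms):
        # k-th term: (-1)^k * u^(2k+1) / (2k+1)!
        total += (-1) ** k * u ** (2 * k + 1) / math.factorial(2 * k + 1)
    return total


# Compare the polynomial with the exact value at a few points.
for x in (0.5, 1.0, 1.5, 2.0):
    print(f"x={x}: taylor={taylor_sin_shifted(x):.8f}, exact={math.sin(x - 1):.8f}")
```

Close to x = 1 a handful of terms already agrees with the exact value to many decimals; further away, more terms (and more tedious arithmetic) are needed.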

Limits of Human Computers

So, I ask again: What does Artificial Intelligence mean? What is its goal? I am going to approach this question by answering a related one: “What does an ‘intelligent human computer’ mean?”

Let me first state what I do not think an “intelligent human computer” is: we cannot expect a human to go beyond the natural limits of his or her processing and storage abilities in order to calculate ever bigger things, like the Taylor polynomial of sin(x-1) + cos(x^2) - tan(x^3), faster and faster. At a certain point, the human will have reached his or her maximum calculating performance. What would help us as instructors, however, is being able to give our human computer instructions at higher levels of abstraction as we educate him or her. (I don't know exactly how human computers were used at the time or which calculations they performed; these are all very broad assumptions made to carry the parallel forward.)

For example, instead of dividing our specific need into small chunks, one of these chunks being “compute the Taylor polynomial of sin(x-1)”, we could simply give the instruction “find a function that closely approximates sin(x-1)”. The computer would then need to know that a Taylor polynomial is a suitable way to answer this question.

In summary, making a human computer more intelligent would mean that we could give him or her ever more abstract instructions, keeping in mind that the tasks themselves are still repetitive and boring, and that this requires us to educate him or her. (There are likely multiple flaws in this parallel and in the reasoning that follows from it; it is meant as a thought experiment.) The same could hold for the digital computer. Now that we understand what we want to do with our intelligent computer, our task is to find a way to educate it to suit our needs.

Abstraction on abstraction

If your next question was something like “What are the possibilities of these ever more abstract instructions? Where does it end?”, I am with you. But from the definition above, it seems unlikely that an instruction would be “paint a masterly, realistic landscape”, because this task does not seem very repetitive, boring or arithmetic.

If the goal of AI can be phrased as “instructing the computer at ever higher levels of abstraction”, then OpenAI’s ChatGPT, which I will discuss in the rest of this article, does a very good job at it.

ChatGPT

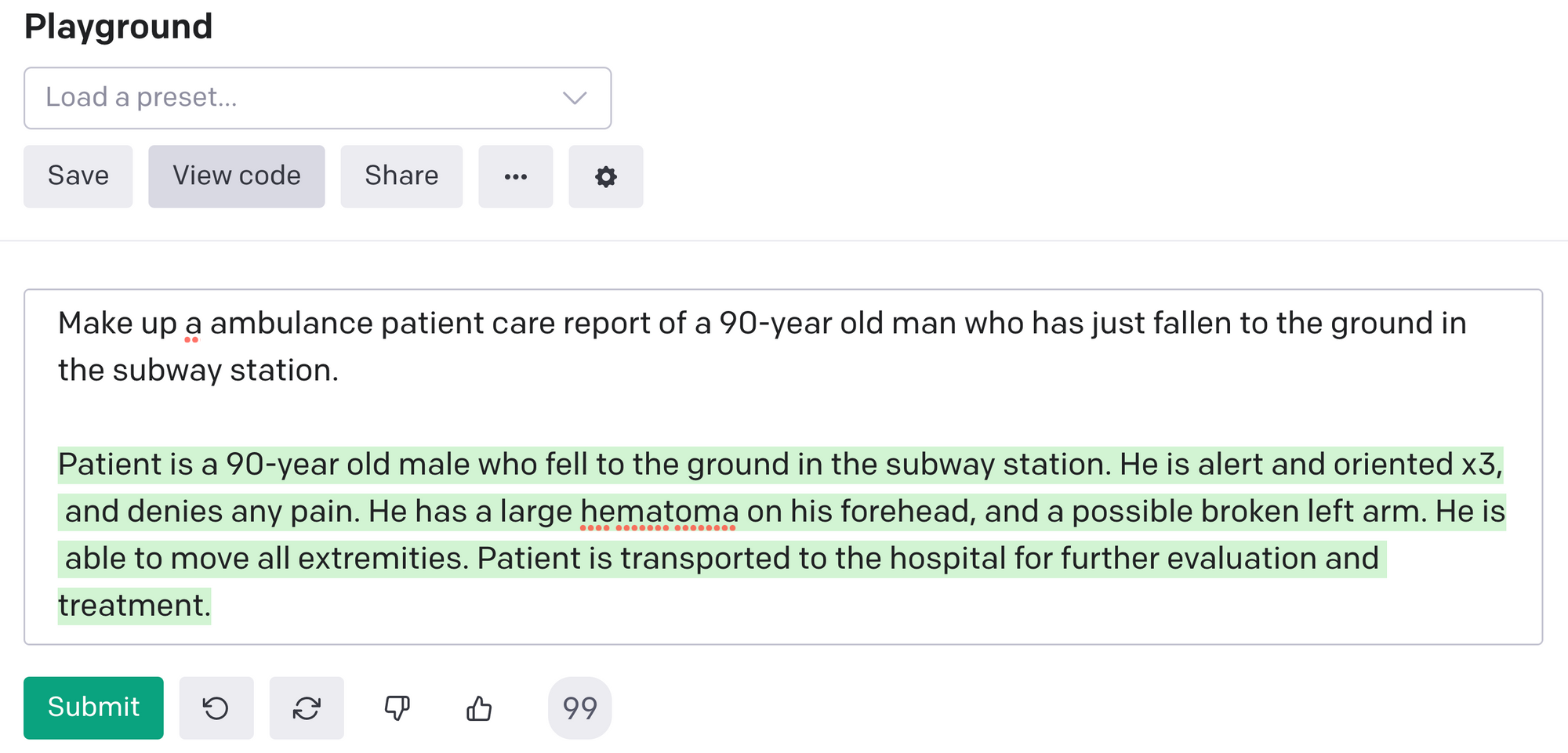

Some time ago, one of my friends told me about OpenAI, a company co-founded by Elon Musk among others, that researches and builds language models: models that estimate how probable each possible next word is, given the text so far. Their GPT-3 model, and ChatGPT, which is built on an improved version of it, are, if you ask me, a novelty. At a low level, I would not be able to explain what GPT-3 does, other than that it predicts the next word over and over, trying to continue the input text you give it. At a high level, it can generate very realistic English and code. Furthermore, GPT-3 is very easy to use: you open the so-called “Playground”, which is just an input prompt, and select your desired length and randomness (along with some other parameters you can play around with). For example, if you ask ChatGPT to write a report for a patient, it returns a realistic report, as shown in Figure 1.
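To illustrate what “predicting the next word” and the “randomness” setting mean, here is a toy sketch in Python. It is emphatically not how GPT-3 works internally: the word scores below are hand-written and hypothetical, and this toy only looks at the previous word, whereas a real language model computes its scores with a large neural network conditioned on all of the preceding text. The `temperature` parameter plays the role of the randomness setting and `max_words` the role of the length setting; these names, like `TOY_SCORES` and `continue_text`, are my own assumptions.

```python
import math
import random

# Hypothetical toy "model": for each previous word, made-up scores for possible
# next words. A real language model would compute such scores from the whole text.
TOY_SCORES: dict[str, dict[str, float]] = {
    "patient": {"was": 2.0, "is": 1.0},
    "was": {"admitted": 2.0, "discharged": 1.5, "purple": -1.0},
    "is": {"stable": 2.0, "recovering": 1.5},
    "admitted": {"yesterday": 1.5, "with": 1.0},
    "discharged": {"today": 1.5, "home": 1.2},
}


def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; a lower temperature means less randomness."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(v - top) for w, v in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def continue_text(prompt: str, max_words: int, temperature: float, rng: random.Random) -> str:
    """Repeatedly predict and append a next word, like a (very) tiny text generator."""
    words = prompt.split()
    for _ in range(max_words):
        if not words:
            break
        scores = TOY_SCORES.get(words[-1].lower())
        if scores is None:  # the toy model has no continuation for this word: stop early
            break
        probs = softmax_with_temperature(scores, temperature)
        candidates = list(probs)
        next_word = rng.choices(candidates, weights=[probs[w] for w in candidates], k=1)[0]
        words.append(next_word)
    return " ".join(words)


rng = random.Random(42)
print(continue_text("The patient", max_words=5, temperature=0.7, rng=rng))
```

With a low temperature the highest-scoring word almost always wins; raising it flattens the probabilities, so less likely continuations (such as “purple”) show up more often.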

Writing Code

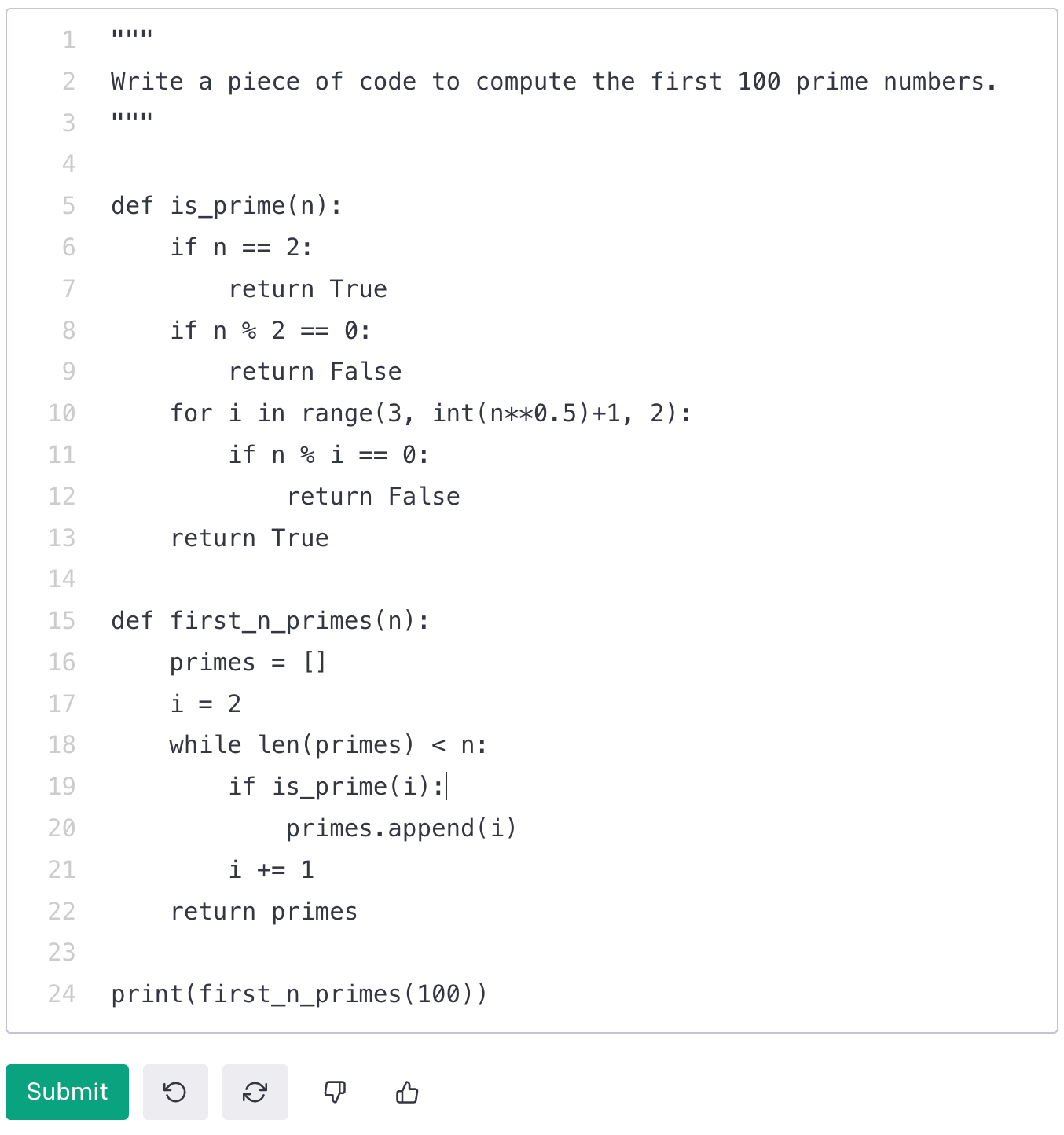

In addition, ChatGPT can produce pieces of code in many different programming languages. For example, from the instruction “Write a piece of code to compute the first 100 prime numbers”, it seamlessly produces the code shown in the image above.

Impressive, to say the least. First, the model was able to interpret the assignment. Second, it was able to produce astonishingly realistic text or code. All in all, ChatGPT seems to be a great leap forward in natural language processing, and its ability to generate realistic sentence structures is a powerful tool for future research and development.
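For readers who want to check the prime-number instruction for themselves, here is a minimal sketch of what such a program could look like. It is my own illustration in Python (the instruction does not name a language), not the code ChatGPT produced, and it uses simple trial division by the primes found so far; `first_primes` is a hypothetical name.

```python
def first_primes(count: int) -> list[int]:
    """Return the first `count` prime numbers using trial division."""
    primes: list[int] = []
    candidate = 2
    while len(primes) < count:
        is_prime = True
        # Only primes p with p * p <= candidate can be the smallest factor.
        for p in primes:
            if p * p > candidate:
                break
            if candidate % p == 0:
                is_prime = False
                break
        if is_prime:
            primes.append(candidate)
        candidate += 1
    return primes


print(first_primes(100))
```

Dividing only by earlier primes up to the square root keeps the work small; for much larger counts, a sieve of Eratosthenes would be the more usual choice.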

Conclusion

Going back to the goal of AI posed earlier: is it of any use that we can generate realistic text and code? Isn't the goal for the computer to understand our instructions, not necessarily to imagine and create on its own? On the other hand, how could we ever know that the computer understands our abstract instructions if it cannot respond to them adequately?

About Alan Turing

Having read some of Alan Turing’s papers, I admire his attempt to explain his often difficult ideas in simple words, including the idea of the imitation game (or Turing test). Although the goal of this thought experiment is still not entirely clear to me, it is clear that he was ahead of his time in thinking about AI and what it could do. One of the things that stands out most to me is that he wrote something along the lines of: “Building AI will not necessarily require faster and more powerful computers than there are now (referring to the 1950s); it is all in the lines of code.”

To explain this idea, he draws an analogy with a writer writing a story: it is not the paper that needs to be altered, but the meaning of the words. If that idea is true, it turns coding into a sort of art form that is essentially limitless and has a huge impact on the future of humanity.

Alan Turing’s life ended tragically; however, he left behind ideas worth exploring for anyone interested in the fundamental questions about computers and AI, especially given that he was at the frontier of these fields.

A more in-depth biography of Alan Turing is given in the article ‘The Disgraced Father’.